Fine-Grained Cross-View Geo-Localization Using a Correlation-Aware Homography Estimator

Abstract

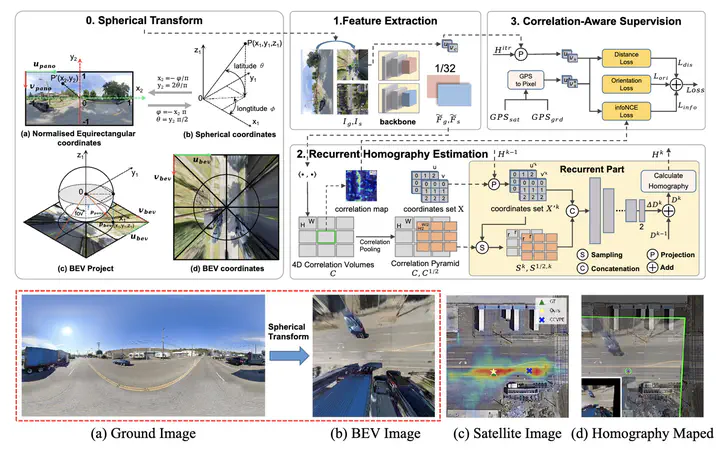

Accurately localizing ground cameras is crucial for applications like autonomous driving and geospatial analysis. Current works compare features extracted from ground and satellite images to estimate the ground camera’s 3-DoF pose (GPS location and orientation). However, they often incur significant time or space complexity because they must traverse candidate poses or generate multiple possible pose representations simultaneously. We propose to align a warped ground image with a corresponding GPS-tagged satellite image covering the same area using homography estimation. We leverage a differentiable spherical transform module to project ground images onto an aerial perspective, reducing the problem to 2D image alignment. We introduce a correlation-aware homography estimation method that attains sub-pixel resolution and meter-level localization accuracy while running in real time at 30 FPS. Extensive experiments demonstrate significant improvements over previous state-of-the-art methods, reducing the mean metric localization error by 21.3% and 34.4% in same-area generalization tasks on the VIGOR and KITTI benchmarks, respectively.
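To illustrate how an estimated homography could translate into a 3-DoF pose, the following minimal sketch maps the center of the warped ground image into the satellite image and reads off a metric offset and heading. It is an illustration under stated assumptions, not the paper's implementation: the function names, the convention that the camera sits at the center of the warped image, the north-up satellite image, and the `meters_per_pixel` parameter are all hypothetical.

```python
import math


def apply_homography(H, x, y):
    """Map pixel (x, y) through a 3x3 homography H given as nested lists."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def pose_from_homography(H, size, meters_per_pixel):
    """Return (east_m, north_m, yaw_deg) relative to the satellite image center.

    Assumptions (not from the paper): both images are size x size pixels,
    the camera sits at the center of the warped ground image, the satellite
    image is north-up, and yaw is measured clockwise from north.
    """
    cx = cy = (size - 1) / 2.0
    # Camera position in satellite pixel coordinates.
    px, py = apply_homography(H, cx, cy)
    # A point one pixel "ahead" of the camera (toward the image top).
    fx, fy = apply_homography(H, cx, cy - 1.0)
    east = (px - cx) * meters_per_pixel
    north = (cy - py) * meters_per_pixel  # image y grows downward
    yaw = math.degrees(math.atan2(fx - px, -(fy - py)))
    return east, north, yaw


# Hypothetical example: a pure translation of 10 px right and 5 px up.
H_shift = [[1.0, 0.0, 10.0],
           [0.0, 1.0, -5.0],
           [0.0, 0.0, 1.0]]
east_m, north_m, yaw_deg = pose_from_homography(H_shift, size=512, meters_per_pixel=0.2)
print(east_m, north_m, yaw_deg)
```

Because the pose is read directly from a single 3x3 matrix, no search over candidate poses is needed at inference time in this sketch, which is the intuition behind the efficiency claim above.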